Handwritten Digits Classification

Contents

Handwritten Digits Classification#

In this notebook, we will learn to — actually, we will make a neural network learn to — classify handwritten digits. This is a classic problem for machine learning, and has become a benchmark for various machine learning algorithms. The database is called “MNIST” (“Modified National Institute of Standards and Technology” database), created by LeCun et al. in 1989. It contains handwritten digits scanned from postal zip codes (the “images”) and the correct numbers they represent (the “labels”). There is a training dataset of 60,000 images, each 28x28 pixels, and a testing dataset of 10,000 images.

The goal is for the network to process an image and recognize the number it is supposed to represent. Since there are ten possible numbers, 0–9, we basically have to classify the images into 10 categories. This is a so-called “classification” problem. It belongs to “supervised learning” because, during training, both the raw images and the correct labels are given to the network. After training, the network will be tested by evaluating its accuracy on the testing dataset, which it will not have seen.

We will use a multi-layer feed-forward network for our purpose. In particular, we will use a convolutional neural network (CNN), which contains convolutional layers as its first few layers. A convolutional layer detects local features in a 2D image. Traditionally, visual features can be edges, curves, etc. For the neural networks, the features are not prescribed, but trainable. A feature detector takes a portion of the image and convolute it with a particular filter (or “kernel”) to find whether the feature is present in this portion of the image. Using such feature detectors help the network utilize spatial structures that exist in the image, instead of naively flattening the image to a vector like we did in the previous classes.

Load data#

For the purpose of constructing and training a convolutional neural network, we will use the Keras package, which provides an interface to several common machine learning libraries and is very easy to use.

import numpy as np

np.set_printoptions(suppress=True)

import matplotlib.pyplot as plt

from tensorflow import keras

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Convolution2D, Dense, Dropout, Flatten, Input, MaxPooling2D

from keras.utils import to_categorical

2024-04-04 10:34:12.348124: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-04-04 10:34:12.426629: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory

2024-04-04 10:34:12.426641: I tensorflow/compiler/xla/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

2024-04-04 10:34:12.920901: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer.so.7'; dlerror: libnvinfer.so.7: cannot open shared object file: No such file or directory

2024-04-04 10:34:12.920941: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libnvinfer_plugin.so.7'; dlerror: libnvinfer_plugin.so.7: cannot open shared object file: No such file or directory

2024-04-04 10:34:12.920946: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.

The Keras package already has the MNIST dataset that we will use, so we simply need to load it.

(train_images, train_labels), (test_images, test_labels) = mnist.load_data()

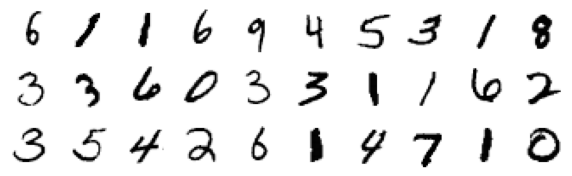

Let us first plot a few examples from the training images.

# plot some random images from training data

size = (10,3) # plot 10x3 images

fig, ax = plt.subplots(size[1], size[0], figsize=size)

ax = ax.flatten()

for i in range(np.prod(size)):

r = np.random.randint(train_images.shape[0]) # pick a random image

ax[i].imshow(train_images[r], cmap='Greys') # plot image

ax[i].axis('off')

plt.show()

As mentioned above, each image is 28x28 pixels, in grayscale. That is, each image is represented by a (28,28) array, each entry being an integer between 0 and 255 (0 is white and 255 is black). We will normalize the pixel values by 255, so that the input units of the network take values between 0 and 1. We also have to convert the images to shape (28,28,1), the last axis being the number of “channels”. The input images have only 1 channel because they are grayscale (whereas a color image would have 3 RGB channels). In the convolutional layers after the input layer, we will have more channels corresponding to the number of features/kernels.

Each image is associated with a label, which is an integer between 0 and 9. Instead of representing the label by a single variable that can take one of ten values, we will use the “one-hot” encoding, which represents the label by a 10-dimensional binary vector. Thus, when the number is 0, the vector is (1,0,0,…); when the number is 1, the vector is (0,1,0,…); and so on. These will be the target outputs of the network. Accordingly, the output layer of the network should have 10 units, which take values between 0 and 1, as we will see below.

# convert data to appropriate form

X_train = train_images.reshape(-1, 28, 28, 1) / 255

X_test = test_images.reshape(-1, 28, 28, 1) / 255

Y_train = to_categorical(train_labels, 10)

Y_test = to_categorical(test_labels, 10)

Train network#

Now we are ready to construct the network. We will add layers one by one, starting from the input layer.

The first layer is a convolutional layer with 16 feature detectors (filters=16). Each feature is calculated on a 8x8 window of the original layer (kernel_size=8). We will scan the image by moving the window 2 pixels at a time in both directions (strides=2); note that the windows will overlap. We will use a nonlinear activation function, \(g(x) = [x]_+\), the so-called “rectified linear” units (activation='relu'). This layer will have 11x11x16 = 1936 units.

The second layer is also a convolutional layer, with 8 feature detectors, each calculated from a 5x5 portion of the first layer. We will again use a stride of 2 and rectified linear units. This layer will have 4x4x8 = 128 units.

The result of the second layer will then be flattened to a 1D vector, forming a layer of 128 units. This is done because the spatial structures in the original images have already been captured by the convolutions, so we can disregard the spatial arrangement of the units from here on. The flattening is done by adding a nominal “flatten” layer, which does not incur new parameters.

After that, we have two more layers, which are usual “dense” layers just like in a multi-layer perceptron. One layer has 32 units, and uses rectified linear units.

The final layer has 10 units, since our output should be a 10-d vector. This last layer uses the “softmax” activation function, \(g(\mathbf{x})_i = \mathrm{e}^{x_i} / \sum_j \mathrm{e}^{x_j}\), so that the values are not only between 0 and 1, but also sum to 1. These values can be thought of as the predicted probability that the image belongs to each of the 10 classes.

This completes the network architecture. The full code is collected below. (Note that, to make the network more “robust”, we can add a few intermediate layers to “trim” the existing layers. This generally improves the performance of the network. It can be done by uncommenting the lines in the code below. For simplicity, we do not have to include them.)

# define model architecture

model = Sequential()

model.add(Input(shape=(28,28,1)))

model.add(Convolution2D(filters=16, kernel_size=8, strides=2, activation='relu'))

model.add(Convolution2D(filters=8, kernel_size=5, strides=2, activation='relu'))

# model.add(MaxPooling2D(pool_size=(2,2)))

# model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(units=32, activation='relu'))

# model.add(Dropout(0.5))

model.add(Dense(units=10, activation='softmax'))

2024-04-04 10:34:15.505874: W tensorflow/compiler/xla/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcuda.so.1'; dlerror: libcuda.so.1: cannot open shared object file: No such file or directory

2024-04-04 10:34:15.505892: W tensorflow/compiler/xla/stream_executor/cuda/cuda_driver.cc:265] failed call to cuInit: UNKNOWN ERROR (303)

2024-04-04 10:34:15.505906: I tensorflow/compiler/xla/stream_executor/cuda/cuda_diagnostics.cc:156] kernel driver does not appear to be running on this host (PHYSICSD2328A): /proc/driver/nvidia/version does not exist

2024-04-04 10:34:15.506247: I tensorflow/core/platform/cpu_feature_guard.cc:193] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA

To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.

We then compile the network by assigning a loss function (loss='categorical_crossentropy') and a method for accelerating the update rules (optimizer='adam'). The structure of the network can be viewed by printing the model summary.

# compile model

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# print model information

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 11, 11, 16) 1040

conv2d_1 (Conv2D) (None, 4, 4, 8) 3208

flatten (Flatten) (None, 128) 0

dense (Dense) (None, 32) 4128

dense_1 (Dense) (None, 10) 330

=================================================================

Total params: 8,706

Trainable params: 8,706

Non-trainable params: 0

_________________________________________________________________

You should be awed by the large number of parameters in the model. The fact that we can train a network to fit so many parameters without overfitting is really a mystery in the field of “deep learning”. There have been theoretical developments to understanding this property (the so-called “generalization problem”), but the problem is far from being settled.

Nevertheless, let us go ahead to train our network and see what we get. We have 60,000 training images, but we should not feed them all at once to the network. Instead, we group them into batches of a smaller size (batch_size=100), and present one batch at a time. Also, we will present the training data multiple times (epochs=10). The accuracy of our network evaluated on the training data will improve over the epochs.

# fit model on training data

model.fit(X_train, Y_train, batch_size=100, epochs=10, verbose=1)

Epoch 1/10

600/600 [==============================] - 3s 4ms/step - loss: 0.3977 - accuracy: 0.8801

Epoch 2/10

600/600 [==============================] - 2s 3ms/step - loss: 0.1410 - accuracy: 0.9585

Epoch 3/10

600/600 [==============================] - 2s 3ms/step - loss: 0.1041 - accuracy: 0.9685

Epoch 4/10

600/600 [==============================] - 2s 3ms/step - loss: 0.0835 - accuracy: 0.9753

Epoch 5/10

600/600 [==============================] - 2s 3ms/step - loss: 0.0713 - accuracy: 0.9789

Epoch 6/10

600/600 [==============================] - 2s 3ms/step - loss: 0.0633 - accuracy: 0.9807

Epoch 7/10

600/600 [==============================] - 2s 4ms/step - loss: 0.0563 - accuracy: 0.9829

Epoch 8/10

600/600 [==============================] - 2s 4ms/step - loss: 0.0503 - accuracy: 0.9843

Epoch 9/10

600/600 [==============================] - 2s 3ms/step - loss: 0.0465 - accuracy: 0.9856

Epoch 10/10

600/600 [==============================] - 2s 3ms/step - loss: 0.0429 - accuracy: 0.9869

<keras.callbacks.History at 0x7fa5cc28f390>

It looks like we have achieved an accuracy of nearly 99% towards the end of the training. Note that this is the accuracy on the training data that the network has already seen. It does not get to 100% because the network is not merely remembering all correct labels, but trying to come up with a “reduced” model (with many parameters though!) to predict the labels. To evaluate the accuracy in an unbiased way, we must test the network on a different dataset that it has not seen before. That is why we will use the testing dataset to evaluate the network.

# evaluate model on testing data

model.evaluate(X_test, Y_test, verbose=1)

313/313 [==============================] - 1s 1ms/step - loss: 0.0489 - accuracy: 0.9850

[0.04886291176080704, 0.9850000143051147]

The network still performs pretty well!

Recognize digits#

Now that we have a working network, we can draw a digit ourselves and ask the network to recognize it. First, you have to save your drawing as a grayscale image of 28x28 pixels. Make sure that it has shape (28,28) and values between 0 and 1. Let us plot the image.

# load and show new drawing

drawing = plt.imread('source/7times.png')

plt.figure(figsize=(1,1))

plt.imshow(drawing, vmin=0, vmax=1, cmap='Greys')

plt.axis('off')

plt.show()

Before we feed the drawing to the network, we have to convert it to shape (:,28,28,1). The last axis means again that we have a grayscale image with only one channel, and the first axis represents how many images we want to present. We will present only one, but you can do many at a time by collecting them in an array.

# predict class of new drawing

drawing = drawing.reshape((-1,28,28,1))

output = model.predict(drawing)

print(output)

1/1 [==============================] - 0s 60ms/step

[[0.00000055 0.00000131 0.00089814 0.00010516 0. 0.00000107

0. 0.99896276 0.00002128 0.00000971]]

Note that the output of the network is a 10-d vector, which represents the network’s “confidence” that the image belongs to each class. To convert that to a digit, simply choose the most likely class.

digit = np.argmax(output, axis=1)

print(digit[0])

7

And that is correct!